Pdf:APRJA Minor Tech: Difference between revisions


__NOTOC__

<div id="cover">

<div id="cover-title">

A Peer-Reviewed Journal About<br><br>

MINOR TECH

</div>

<div class="authors">

Camille Crichlow<br>

Teodora Sinziana Fartan<br>

Susanne Förster<br>

Inte Gloerich<br>

Mara Karagianni<br>

Jung-Ah Kim<br>

Freja Kir<br>

Inga Luchs<br>

Alasdair Milne<br>

Shusha Niederberger<br>

Jack Wilson<br>

nate wessalowski<br>

xenodata co-operative (Alexandra Anikina <br>

& Yasemin Keskintepe)<br>

Sandy Di Yu<br>

Christian Ulrik Andersen <br>

& Geoff Cox (Eds.)

</div>

<div id="aprja-details">

<div id="aprja-logo">

[[File:APRJA.svg|frame]]

</div>

<div id="volume-issue-year">

Volume 12, Issue 1, 2023<br>

ISSN 2245-7755

</div>

</div>

</div>

<div id="toc">

Contents

* '''Christian Ulrik Andersen & Geoff Cox'''<br>[[#Editorial:_Toward_a_Minor_Tech|Editorial: Toward a Minor Tech]]

* '''Manetta Berends & Simon Browne'''<br>[[#About wiki-to-print|About wiki-to-print]]

* '''Camille Crichlow'''<br>[[#Scaling_Up,_Scaling_Down: Racialism_in_the_Age_of_Big_Data|Scaling Up, Scaling Down: Racialism in the Age of Big Data]]

* '''Jack Wilson'''<br>[[#Minor_Tech_and_Counter-revolution:Tactics,_Infrastructures,_QAnon|Minor Tech and Counter-revolution: Tactics, Infrastructures, QAnon]]

* '''Teodora Sinziana Fartan'''<br>[[#Rendering_Post-Anthropocentric_Visions:Worlding_As_a_Practice_of_Resistance|Rendering Post-Anthropocentric Visions: Worlding As a Practice of Resistance]]

* '''Jung-Ah Kim'''<br>[[#Weaving_and_Computation:Can_Traditional_Korean_Craft_Teach_Us_Something?|Weaving and Computation: Can Traditional Korean Craft Teach Us Something?]]

* '''Freja Kir'''<br>[[#Glitchy,_Caring,_Tactical:_A_Relational_Study_Between_Artistic_Tactics_and_Minor_Tech|Glitchy, Caring, Tactical: A Relational Study Between Artistic Tactics and Minor Tech]]

* '''xenodata co-operative<br>(Alexandra Anikina, Yasemin Keskintepe)'''<br>[[#Spirit_Tactics:(Techno)magic_as_Epistemic_Practice_in_Media_Arts_and_Resistant_Tech|Spirit Tactics: (Techno)magic as Epistemic Practice in Media Arts and Resistant Tech]]

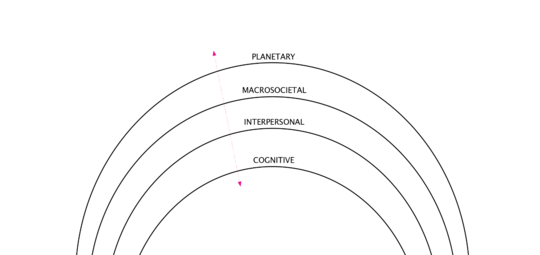

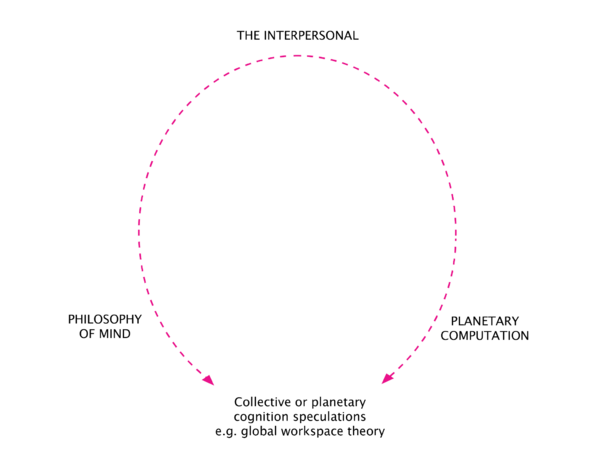

* '''Alasdair Milne'''<br>[[#Lurking_in_the_Gap_between_Philosophy_of_Mind_and_the_Planetary|Lurking in the Gap between Philosophy of Mind and the Planetary]]

* '''Susanne Förster'''<br>[[#The_Bigger_the_Better?!The_Size_of_Language_Models_and_the_Dispute_over_Alternative_Architectures|The Bigger the Better?! The Size of Language Models and the Dispute over Alternative Architectures]]

* '''Inga Luchs'''<br>[[#AI_for_All?Challenging_the_Democratization_of_Machine_Learning|AI for All? Challenging the Democratization of Machine Learning]]

* '''Sandy Di Yu'''<br>[[#Time_Enclosures_and_the_Scales_of_Optimisation:_From_Imperial_Temporality_to_the_Digital_Milieu|Time Enclosures and the Scales of Optimisation: From Imperial Temporality to the Digital Milieu]]

* '''Inte Gloerich'''<br>[[#Towards_DAOs_of_Difference:_Reading_Blockchain_Through_the_Decolonial_Thought_of_Sylvia_Wynter|Towards DAOs of Difference: Reading Blockchain Through the Decolonial Thought of Sylvia Wynter]]

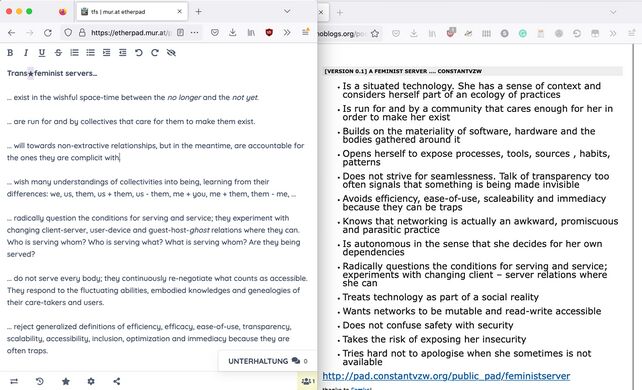

* '''Shusha Niederberger'''<br>[[#Calling_the_User:Interpellation_and_Narration_of_User_Subjectivity_in_Mastodon_and_Trans*Feminist_Servers|Calling the User: Interpellation and Narration of User Subjectivity in Mastodon and ''Trans*Feminist Servers'']]

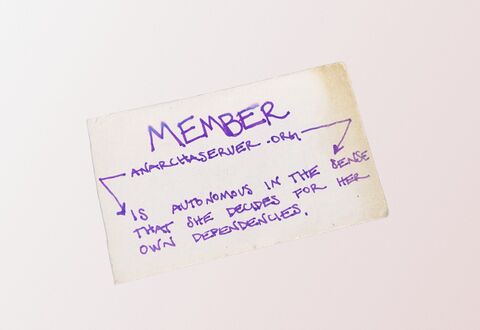

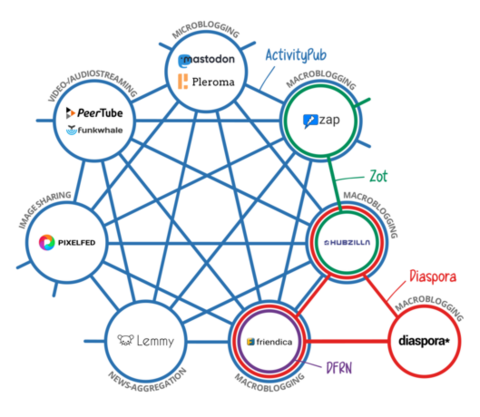

* '''nate wessalowski & Mara Karagianni'''<br>[[#From_Feminist_Servers_to_Feminist_Federation|From Feminist Servers to Feminist Federation]]

* [[#Contributors|Contributors]]

</div>

<div id="colophon">

{{ Toward a Minor Tech:APRJA-Colophon }}

</div>

<div class="item" id="editorial">

{{ Toward a Minor Tech:APRJA-Editorial }}

</div>

<div class="item" id="wiki-to-print">

{{ Toward a Minor Tech:APRJA-Wiki-to-print }}

</div>

<div class="item">

{{ Toward_a_Minor_Tech:CRICHLOW5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Wilson5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Fartan }}

</div>

<div class="item">

{{ Toward a Minor Tech:Kim5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Kir5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:AnikinaKeskintepe5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Milne5000 }}

</div>

<div class="item">

{{ Toward_a_Minor_Tech:Foerster5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Luchs5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Toward a Minor Tech:Yu-5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Gloerich5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:Niederberger5000 }}

</div>

<div class="item">

{{ Toward a Minor Tech:FeministServers5000 }}

</div>

<div id="contributors">

{{ Toward a Minor Tech:APRJA-Contributors }}

</div>

Latest revision as of 12:14, 5 June 2024

Contents

- Christian Ulrik Andersen & Geoff Cox

Editorial: Toward a Minor Tech - Manetta Berends & Simon Browne

About wiki-to-print - Camille Crichlow

Scaling Up, Scaling Down: Racialism in the Age of Big Data - Jack Wilson

Minor Tech and Counter-revolution: Tactics, Infrastructures, QAnon - Teodora Sinziana Fartan

Rendering Post-Anthropocentric Visions: Worlding As a Practice of Resistance - Jung-Ah Kim

Weaving and Computation: Can Traditional Korean Craft Teach Us Something? - Freja Kir

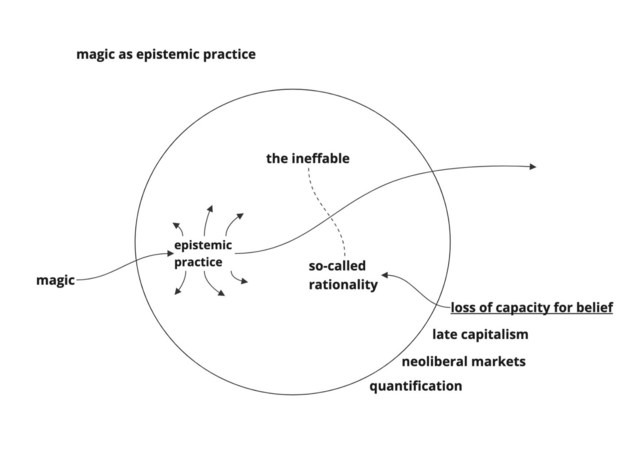

Glitchy, Caring, Tactical: A Relational Study Between Artistic Tactics and Minor Tech - xenodata co-operative

(Alexandra Anikina, Yasemin Keskintepe)

Spirit Tactics: (Techno)magic as Epistemic Practice in Media Arts and Resistant Tech - Alasdair Milne

Lurking in the Gap between Philosophy of Mind and the Planetary - Susanne Förster

The Bigger the Better?! The Size of Language Models and the Dispute over Alternative Architectures - Inga Luchs

AI for All? Challenging the Democratization of Machine Learning - Sandy Di Yu

Time Enclosures and the Scales of Optimisation: From Imperial Temporality to the Digital Milieu - Inte Gloerich

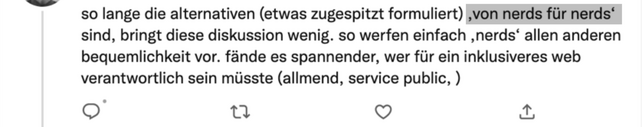

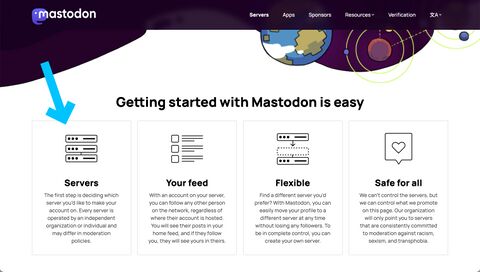

Towards DAOs of Difference: Reading Blockchain Through the Decolonial Thought of Sylvia Wynter - Shusha Niederberger

Calling the User: Interpellation and Narration of User Subjectivity in Mastodon and Trans*Feminist Servers - nate wessalowski & Mara Karagianni

From Feminist Servers to Feminist Federation - Contributors

A Peer-Reviewed Journal About_

ISSN: 2245-7755

Editors: Christian Ulrik Andersen and Geoff Cox

Published by: Digital Aesthetics Research Centre, Aarhus University

Design: Manetta Berends and Simon Browne (CC)

Fonts: Happy Times at the IKOB by Lucas Le Bihan, AllCon by Simon Browne

CC license: ‘Attribution-NonCommercial-ShareAlike’

Christian Ulrik Andersen

& Geoff Cox


Editorial: Toward a Minor Tech

The three characteristics of minor tech are the deterritorialization of technology, the connection of the individual to a political immediacy, and the collective arrangement of its operations. Which amounts to this: that “minor” no longer characterises certain technologies, but describes the revolutionary conditions of any technology within what we call big (or ubiquitous). ––

Deleuze and Guattari, Kafka: Toward a Minor ~~Literature~~ Tech (18)

This journal issue addresses what we are calling "minor tech", making reference to Gilles Deleuze and Félix Guattari's essay "Kafka: Toward a Minor Literature" (written in 1975). They propose the concept of minor literature as opposed to great or established literature: the use of a major language that subverts it from within. "Becoming-minoritarian" in this sense (to use a related concept from A Thousand Plateaus) involves the recognition of particular instances of power and the ability of the repressed minority to gain some degree of autonomy of expression. "Expression must break forms, encourage ruptures and new sproutings", as Deleuze and Guattari put it (28).

A characteristic of minor technologies is that everything in them is politics.

For our purpose, this notion of the minor is a relative position to major (or big) tech. This also partly invokes the issue of scale, the theme of the 2023 edition of transmediale festival. In the call, the organizers state that the festival is an exploration of “how technological scale sets conditions for relations, feelings, democratic processes, and infrastructures” (https://2023.transmediale.de/). The importance of scale becomes apparent in the massification of images and texts on the internet, and the application of various scalar machine techniques that try to make things comprehensible for human and non-human readers alike; big computing begets big data. However, “we have a problem with scale”, as Anna Lowenhaupt Tsing puts it (37), in its connection to modernist master narratives that organise life on an increasingly globalised scale (the 'bigness' of capitalism). Alternatively, she writes, we need to “notice” the small details and not assume that these need to be scaled up to be effective, as is the orthodoxy of research. In technical fields, not least machine learning, this problem with scale has severe consequences, with ensuing discrimination and environmental damage.

A minor technology is that which a minority constructs within the grammar of technology.

Small tech on the other hand operates at human scale (more peer to peer than server-client) and "stutters and stammers the major" (to use the words of Deleuze and Guattari once more). More pragmatically, as artist-researcher Marloes de Valk puts it in the Damaged Earth Catalog: “Small technology, smallnet and smolnet are associated with communities using alternative network infrastructures, delinking from the commercial Internet.” Further issues that arise from scale question the paradigms of 'big computing'; for instance, the dynamics between big data and small technology, attentive to what Cathy Park Hong calls “minor feelings” (that derive from racial and economic discrimination in society); how to bring together new material and minoritarian cultural assemblages between humans and nonhumans, ecology, and technological infrastructure and systems; or, how this relates to minor practices and collective action. Although, ultimately, notions of big or small become less important, and everything is to be considered political (or micropolitical) if we follow our conceptual trajectory.

As such, this publication sets out to question some of the major ideals of technology and its problems of bigness, extending it to follow the three main characteristics identified in Deleuze and Guattari's essay, namely deterritorialization, political immediacy, and collective value. We would argue that these remain pertinent concepts: as a means to deterritorialize from repressive conceptual, social, affective, linguistic and technical regimes, and transform the conditions through which technology can become a "collective machine of expression" (Deleuze and Guattari 18).

A characteristic of a minor technology is that in it everything takes on a collective value.

Following a process of open exchanges online and a three-day in-person research workshop in London, at London South Bank University and King's College London, this edition of APRJA brings together researchers who think through the potentials of 'the minor', and what we are referring to as minor (or minority) tech. As stated, this is not a problem of scale alone (although many of the contributions take this approach) but of politics – how minorities struggle for autonomy of expression. Together, authors address minor tech through its relation to a range of pressing concerns, exploring: racism in predictive policing technology; QAnon as an assemblage of ‘minor techs’; speculative practices of 'worlding'; parallels between computing and the craft of weaving; artistic tactics in opposition to large-scale digital platforms; attempts to decentre Western epistemologies through spirit tactics and (techno)magic; parallels between planetary-scale computation and a philosophy of mind; problems associated with generative large language models; inflated claims of democratizing machine learning; processes of optimisation and our changing experience of time; connections between DAOs, countercultural blockchain and decoloniality; user subjectivity in Mastodon and the Trans*Feminist Servers project; and the final word is with Trans*Feminist Servers whose practice exemplifies the collective value of minor tech.

A minor technology is an intensive utilisation of technology — it utilises the inner tensions of technology.

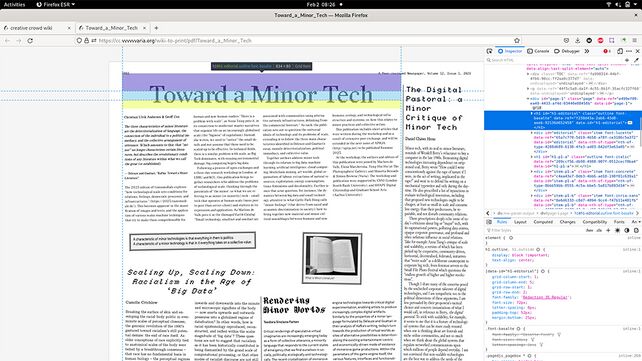

This publication (APRJA) further develops short articles that were first written during the workshop at speed, published as a newspaper and distributed at transmediale (the PDF can be downloaded from here). As well as exploring our shared interest and understanding of minor tech, our approach has been to implement these principles in practice. Consequently the publication has been produced using wiki-to-print tools, based on MediaWiki software, Paged Media CSS techniques and the JavaScript library Paged.js, which renders the PDF. In other words, no Adobe products have been used. As such the divisions of labour between writers, editors, designers, software developers have been brought closer together in ways that challenge some of the normative paradigms of research process and publication, in keeping with the applied ethics of minor tech.

— Aarhus/London, June 2023

Acknowledgements

Thanks to all contributors and initial workshop participants, including Roel Roscam Abbing, Mateus Domingos, Edoardo Lomi & Macon Holt, Anna Mladentseva, marthe van dessel (alias: ooooo), mika motskobili (alias: vo ezn), ai carmela netîrk, and to Manetta Berends and Simon Browne (Varia) for their design work and the development of the wiki-to-print platform. Additional thanks to Marloes de Valk, Elena Marchevska, Tung-Hui Hu, who contributed to the events in London, and Daniel Chávez Heras, Gabriel Menotti, Søren Pold, Winnie Soon, and Magda Tyzlik-Carver who supported the workshop as respondents, and finally to the anonymous peer reviewers who helped to sharpen the essays. The workshop and publication were supported by CSNI (London South Bank University), SHAPE Digital Citizenship (Aarhus University), and Graduate School of Arts (Aarhus University).

Works cited:

Deleuze, Gilles, and Félix Guattari. Kafka: Toward a Minor Literature [1975]. Translated by Dana Polan. University of Minnesota Press, 1986.

———. A Thousand Plateaus: Capitalism and Schizophrenia. Translated by Brian Massumi. University of Minnesota Press, 1987.

Hong, Cathy Park. Minor Feelings: An Asian American Reckoning. One World, 2020.

Tsing, Anna Lowenhaupt. The Mushroom at the End of the World: On the Possibility of Life in Capitalist Ruins. Princeton University Press, 2015.

de Valk, Marloes. Damaged Earth Catalogue, 2022, https://damaged.bleu255.com/Small_Technology/. Accessed 22 Jan, 2023.

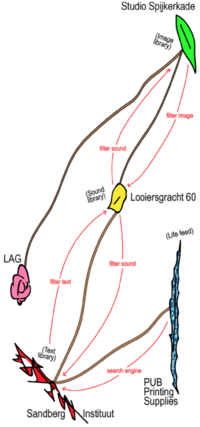

Manetta Berends & Simon Browne

About wiki-to-print

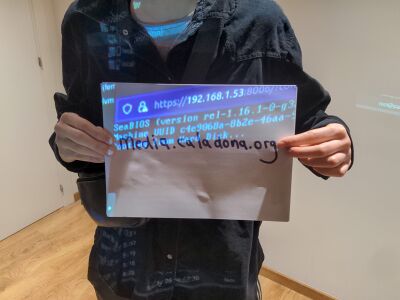

This journal is made with wiki-to-print, a collective publishing environment based on MediaWiki software[1], Paged Media CSS[2] techniques and the JavaScript library Paged.js[3], which renders a preview of the PDF in the browser. Using wiki-to-print allows us to work shoulder-to-shoulder as collaborative writers, editors, designers and developers, in a non-linear publishing workflow where design and content unfold at the same time, allowing the one to shape the other.

Following the idea of "boilerplate code" which is written to be reused, we like to think of wiki-to-print as a boilerplate as well, instead of thinking of it as a product, platform or tool. The code that is running in the background is a version of previous wiki-printing instances, including:

- the work on the Diversions[4] publications by Constant[5] and OSP[6]

- the book Volumetric Regimes[7] by Possible Bodies[8] and Manetta Berends[9]

- TITiPI's[10] wiki-to-pdf environments[11] by Martino Morandi

- Hackers and Designers'[12] version wiki2print[13] that was produced for the book Making Matters[14]

So, wiki-to-print/wiki-to-pdf/wiki2print is not standalone, but part of a continuum of projects that see software as something to learn from, adapt, transform and change. The code that is used for making this journal is released as yet another version of this network of connected practices[15].

This wiki-to-print is hosted at CC[16] (creative crowds). In moving from cloud to crowds, CC is a thinking device for us: a way to consider how to hand over ways of working and share a space for publishing experiments with others.
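To make the workflow described at the start of this section more concrete, the following is a minimal, hypothetical sketch in Python of how rendered wiki content might be combined with Paged Media CSS and Paged.js. It is not the wiki-to-print code itself. The wiki endpoint, the function names, the stylesheet and the choice to load the Paged.js polyfill from a public CDN are all assumptions made for illustration. Only the MediaWiki `action=parse` API call and the `@page` rule are standard features of those tools.

```python
"""Hypothetical sketch of a wiki-to-print style pipeline (not the project's actual code)."""
import html
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the Paged.js polyfill is loaded from a public CDN; a real setup may self-host it.
PAGEDJS_POLYFILL = "https://unpkg.com/pagedjs/dist/paged.polyfill.js"

# Paged Media CSS: page size, margins and a page counter in the bottom margin box.
# Assumption: each article sits in an element with class "item" and starts on a new page.
PRINT_CSS = """
@page {
  size: A4;
  margin: 20mm;
  @bottom-center { content: counter(page); }
}
.item { break-before: page; }
"""


def parse_url(api_endpoint: str, page_title: str) -> str:
    """Build a MediaWiki API request for the rendered HTML of one wiki page."""
    query = urlencode({
        "action": "parse",
        "page": page_title,
        "prop": "text",
        "format": "json",
        "formatversion": "2",
    })
    return f"{api_endpoint}?{query}"


def fetch_rendered_html(api_endpoint: str, page_title: str) -> str:
    """Fetch the page's rendered HTML from the wiki (requires network access)."""
    with urlopen(parse_url(api_endpoint, page_title)) as response:
        payload = json.load(response)
    # With formatversion=2 the rendered HTML is returned as a plain string.
    return payload["parse"]["text"]


def build_print_document(title: str, body_html: str, css: str = PRINT_CSS) -> str:
    """Wrap wiki HTML in a page that Paged.js paginates when opened in a browser."""
    return (
        "<!DOCTYPE html>\n"
        '<html><head><meta charset="utf-8">'
        f"<title>{html.escape(title)}</title>\n"
        f"<style>{css}</style>\n"
        f'<script src="{PAGEDJS_POLYFILL}"></script>\n'
        f"</head><body>{body_html}</body></html>\n"
    )
```

In such a setup, one would call `fetch_rendered_html` with the wiki's `api.php` address (for instance the placeholder `https://wiki.example.org/api.php`) and a page title, pass the result to `build_print_document`, and open the resulting HTML file in a browser, where Paged.js lays the content out as pages that can be printed to PDF. Design changes then happen in the stylesheet while writing continues on the wiki.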

Notes

- ↑ https://www.mediawiki.org

- ↑ https://www.w3.org/TR/css-page-3/

- ↑ https://pagedjs.org

- ↑ https://diversions.constantvzw.org

- ↑ https://constantvzw.org

- ↑ https://osp.kitchen

- ↑ http://data-browser.net/db08.html + https://volumetricregimes.xyz

- ↑ https://possiblebodies.constantvzw.org

- ↑ https://manettaberends.nl

- ↑ http://titipi.org

- ↑ https://titipi.org/wiki/index.php/Wiki-to-pdf

- ↑ https://hackersanddesigners.nl

- ↑ https://github.com/hackersanddesigners/wiki2print

- ↑ https://hackersanddesigners.nl/s/Publishing/p/Making_Matters._A_Vocabulary_of_Collective_Arts

- ↑ https://git.vvvvvvaria.org/CC/wiki-to-print

- ↑ https://cc.vvvvvvaria.org

Camille Crichlow


Scaling Up, Scaling Down: Racialism in the Age of Big Data

Abstract

This article explores the shifting perceptual scales of racial epistemology and anti-blackness in predictive policing technology. Following Paul Gilroy, I argue that the historical production of racism and anti-blackness has always been deeply entwined with questions of scale and perception. Where racialisation was once bound to the anatomical scale of the body, Thao Phan and Scott Wark’s conceptualisation of “racial formations as data formations” informs insights into the ways in which “race”, or its 21st century successor, is increasingly being produced as a cultivation of post-visual, data-driven abstractions. I build upon analysis of this phenomenon in the context of predictive policing, where analytically derived “patrol zones” produce virtual barriers that divide civilian from suspect. Beyond a “garbage in, garbage out” critique, I explore the ways in which predictive policing instils racialisation as an epiphenomenon of data-generated proxies. By way of conclusion, I analyse American Artist’s 21-minute video installation 2015 (2019), which depicts the point of view of a police patrol car equipped with a predictive policing device, to parse the scales upon which algorithmic regimes of racial domination are produced and resisted.

Introduction

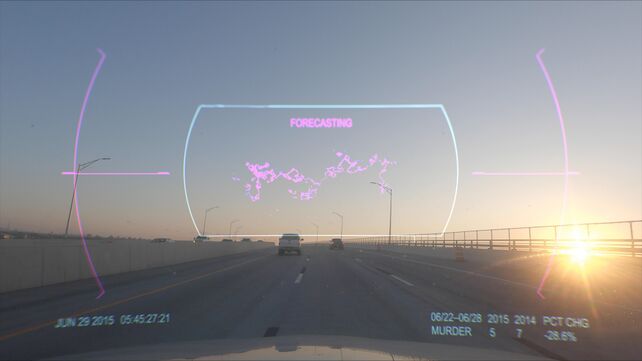

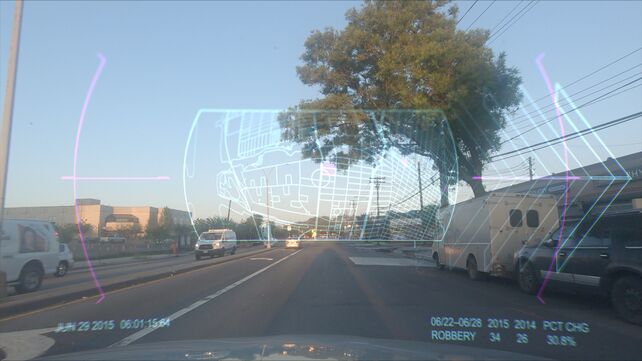

2015, a 21-minute video installation shown at American Artist’s 2019 multimedia solo exhibition My Blue Window at the Queens Museum in New York City, assumes the point of view of a dashboard surveillance camera positioned on the hood of a police car cruising through Brooklyn’s side streets and motorways. Superimposed on the vehicle’s front windshield, a continuous flow of statistical data registers the frequency of crime between 2015 and the preceding year: “Murder, 2015: 5, 2014: 7. Percent change: -28.6%”. Below a shifting animation of neon pink clouds, the word “forecasting” appears as the sun rises on the freeway. The vehicle suddenly changes course, veering towards an exit guided by a series of blinking ‘hot spots’ identified on the screen’s navigation grid. Over the deafening din of a police siren, the car races towards its analytically derived patrol zone. The movement of the camera slows to a stop on an abandoned street as the words “Crime Deterred” repetitively pulse across the screen. This narrative arc circuitously structures the filmic point of view of a predictive policing device.

Like American Artist’s broader multimedia oeuvre, 2015 operates at historical intersections of race, technology, and knowledge production. Their legal name change to American Artist in 2013 suggests a purposeful play with ambivalence, one that foregrounds the visibility and erasure of black art practice, asserting blackness as descriptive of an American artist, while simultaneously signalling anonymity to evade the surveillant logics of virtual spaces. Across their multimedia works, forms of cultural critique stage the relation between blackness and power while addressing histories of network culture. Foregrounding the analytic means through which data-processing and algorithms augment and amplify racial violence against black people in predictive policing technology, American Artist’s 2015 interweaves fictional narrative and coded documentary-like footage to construct an experimental means of inviting rumination on racialised spaces and bodies and their assigned “truths” in our surveillance culture.

As large-scale automated data processing entrenches racial inequalities through processes indiscernible to the human eye, 2015 plays with scale as a response. Following Joshua DiCaglio, I invoke scale here as a mechanism of observation that establishes “a reference point for domains of experience and interaction” (3). Relatedly, scale structures the relationship between the body and its abstract signifiers, between identity and its lived outcomes. As sociologist and cultural studies scholar Paul Gilroy observes, race has always been a technology of scale: a tool to define the minute, minuscule, microscopic signifiers of the human against an imagined nonhuman ‘other’. In the 21st century, however, racialisation finds novel lines of emergence in evolving technological formats less constrained by the perceptual and scalar codes of a former racial era. No doubt, residual patterns of racialisation at the scale of the individual body remain entrenched in everyday experience. Here, however, I adopt a different orientation, one that specifically examines the less considered role of data-driven technologies that increasingly inscribe racialisation as a large-scale function of datafication.

Predictive policing technology relies on the accumulation of data to construct zones of suspicion through which racialised bodies are disproportionately rendered hyper-visible and subject to violence (Brayne; Chun). Indeed, predictive analytics range across a wide spectrum of sociality. For example, health care algorithms employed to predict and rank patient care favour white patients over black (Obermeyer), and automated welfare eligibility calculations keep the racialised poor from accessing state-funded resources (Rao; Toos). Relatedly, credit-market algorithms widen already heightened racial inequalities in home ownership (Bhutta et al.). While racial categories are not explicitly coded within the classificatory techniques of analytic technologies, large-scale automated data processing often produces racialising outputs that, at first glance, appear neutral.

Informed by the “creeping” role of prediction and subsequent “zones of suspicion,” I consider how racial epistemology is actively reconstructed and reified within the scalar magnitude of “big data”. This article will focus on racialisation as it is bound up in the historical production of blackness in the American context, though I will touch on the ways in which big data is reframing the categories upon which former racial classifications rest more broadly. Following Paul Gilroy’s historical periodisation of racism as a scalar project of the body that moves simultaneously inwards and downwards towards molecular scales of corporeal visibility, I ask how “big data” now exerts upwards and outwards pressures into a globalised regime of datafication, particularly in the context of predictive policing technology. Drawing from Thao Phan and Scott Wark’s conception of racial formations as data formations, that is, “modes of classification that operate through proxies and abstractions and that figure racialized bodies not as single, coherent subjects, but as shifting clusters of data” (1), I explore the stakes and possibilities for dismantling racialism when the body is no longer its singular referent. To do this, I build upon analysis of this phenomenon in the context of predictive policing, where analytically derived “patrol zones” produce virtual barriers that map new categories of human difference through statistical inferences of risk. I conclude by returning to analysis of American Artist’s 2015 as an example of emergent artistic intervention that reframes the scales upon which algorithmic regimes of domination are being produced and resisted.

The scales of Euclidean anatomy

The story of racism, as Paul Gilroy tells it, moves simultaneously inwards and downwards into the contours of the human body. The onset of modernity – defined by early colonial contact with indigenous peoples and the expansion of European empires, the trans-Atlantic slave trade, and the emergence of enlightenment thought – saw the evolution of a thread of natural scientific thinking centred around taxonomical hierarchies of human anatomy. 18th century naturalist Carl Linnaeus’s major classificatory work, Systema Naturae (1735), is widely recognised as the most influential taxonomic method that shaped and informed racist differentiations well into the nineteenth century and beyond. Linnaeus’s framework did not yet mark a turn towards biological hierarchisation of racial types. Nevertheless, it inaugurated a new epoch of race science that would collapse and order human variation into several fixed and rigid phenotypic prototypes. By the onset of the 19th century, the racialised body took on new meaning as the terminology of race slid from a polysemous semantic to a narrower signification of hereditary, biological determinism. In this shift from natural history to the biological sciences, Gilroy notes a change in the “modes and meanings of the visual and the visible”, and thus, the emergence of a new kind of racial scale; what he terms the scale of comparative or Euclidean anatomy (844). This shift in scalar perceptuality is defined by “distinctive ways of looking, enumerating, measuring, dissecting, and evaluating” – a trend that could only move further inwards and downwards under the surface of the skin (844). By the middle of the 19th century, for example, the sciences of physiognomy, phrenology and comparative anatomy had encoded racial hierarchies within the physiological semiotics of skulls, limbs, and bones. By the early 20th century, the eugenics movement pushed the science of racial discourse to ever smaller scopic regimes. Even the microscopic secrets of blood became subject to racial scrutiny through the language of genetics and heredity.

Now twenty years into the 21st century, our perceptual regime has been fundamentally altered by exponential advancements in digital technology. Developments across computational, biological, and analytic sciences produce new forms of perceptual scale, and with them, as Gilroy suggests, open consideration for envisioning the end of race as we know it. Writing in the late 1990s, Gilroy observed how technical advancements in imaging technologies, such as the nuclear magnetic resonance spectroscope [NMR/MRI], and positron emission tomography [PET], “have remade the relationship between the seeable and the unseen” (846). By imaging the body in new ways, Gilroy proposes, emergent technologies that allow the body to be viewed on increasingly minute scales “impact upon the ways that embodied humanity is imagined and upon the status of bio-racial differences that vanish at these levels of resolution” (846). This scalar movement ever inwards and downwards became especially evident in the advancements of molecular biology. Between 1990 and 2003, the Human Genome Project mapped the first human genome using new gene sequencing technology. The project concluded that there is no scientific evidence that supports the idea that racial difference is encoded in our genetic material. Once and for all, or so we thought, biological conceptions of race were disproved as a scientifically valid construct. In this scalar movement beyond Euclidean anatomy, as Gilroy discerns, the body ceases to delimit “the scale upon which assessments of the unity and variation of the species are to be made” (845). In other words, we have departed from the perceptual regime that once overdetermined who could be deemed ‘human’ at the scale of the body.

Rehearsing this argument is not meant to suggest that racism has been eclipsed by innovations in technology, or that racial classifications do not remain persistently visible. Gilroy (“Race and Racism in ‘The Age of Obama’”), along with his critics, makes clear that the “normative potency” of biological racism retains rhetorical and semiotic force within contemporary culture. Efforts to resuscitate research into race’s biological basis continue to appear in scientific fields (Saini), while the ongoing deaths of black people at the hands of police, or the increase in violent assaults against East Asian people during the coronavirus pandemic, demonstrate how racism is obstinately fixed within our visual regime. Gilroy suggests, however, that while the perceptual scales of race difference remain entrenched, these expressions of racialism are inherently insecure and can be made to yield, politically and culturally, to alternative visions of non-racialism. To combat the emergent racism of the present, this vision suggests, we must look beyond the perceptual-anatomical scales of race difference that defined the modern episteme. Having “let the old visual signifiers of race go”, Gilroy directs attention to tasks of doing “a better job of countering the racisms, the injustices, that they brought into being if we make a more consistent effort to de-nature and de-ontologize ‘race’ and thereby to disaggregate raciologies” (839).

Attending to these tasks of intervention requires that we keep in mind the myriad ways in which the residual traces left by older racial regimes subtly insinuate themselves into the functions of newly emergent “post-visual” technologies. As Alexandra Hall and Louise Amoore observe, the nineteenth century ambition to dissect the body, and thus lay bare its hidden truths, also “reveal a set of violences, tensions, and racial categorizations which may be reconfigured within new technological interventions and epistemological frameworks” (451). Referencing contemporary virtual imaging devices which scan and render the body visible in the theatre of airport security, Hall and Amoore suggest that new ways of visualizing, securitizing, and mapping the body draw upon the age-old racial fantasy of rendering identity fully transparent and knowable through corporeal dissection. While the anatomical scales of racial discourse have not been wholly untethered from the body, the ways in which race, or its 21st century successor, is being rendered in new perceptual formats remain an urgent question.

‘Racial formations as data formations’

Beyond anatomical scales of race discourse, there is a sense that race is being remade not within extant contours of the body’s visibility, but outside corporeal recognition altogether. If the inward direction towards the hidden racial truths of the human body defined the logics and aesthetics of our former racial regime, how might we think about the 21st century avalanche of data and analytic technologies that increasingly determine life chances in an interconnected, yet deeply inequitable world? Can it be said that our current racial regime has reversed racialism’s inward march, now exerting upwards and outwards pressures into a globalised regime of “big data”?

Big data, broadly understood, refers to data that is large in volume, high in velocity, and is provided in a variety of formats from which patterns can be identified and extracted (Laney). “Big”, of course, evokes a sense of scalar magnitude. For data scholar Wolfgang Pietsch, “a data set is ‘big’ if it is large enough to allow for reliable predictions based on inductive methods in a domain comprising complex phenomena”. Thus, data can be considered ‘big’ in so far as it can generate predictive insights that inform knowledge and decision-making. Growing ever more prevalent across major industries such as medical practice (Rothstein), warfare (Berman), criminal justice (Završnik) and politics (Macnish and Galliott), data acquisition and analytics increasingly form the bedrock of not only the global economy, but domains of human experience.

Big data technologies are often claimed to be more truthful, efficient, and objective compared to the biased and error-prone tendencies of human decision-making. Their critics, however, have shown this assumption to be outright false – particularly for people of colour. Safiya Noble’s Algorithms of Oppression highlights cases of algorithmically driven data failures which underscore the ways in which sexism and racism are fundamental to online corporate platforms like Google. Cathy O’Neil’s Weapons of Math Destruction addresses the myriad ways in which big data analytics tend to disadvantage the poor and people of colour under the auspices of objectivity. Such critiques often approach big data through the lens of bias – either bias embedded in the views of the dataset or algorithm creator, or bias ingrained in the data itself. In other words, biased data will subsequently produce biased outcomes – garbage in, garbage out. Demands for inclusion or “unbiased data”, however, often fail to address the racialised dialectic between inside and outside, human and Other. As Ramon Amaro argues, “to merely include a representational object in a computational milieu that has already positioned the white object as the prototypical characteristic catalyses disruption superficially” (53). From this perspective, the racial other is positioned in opposition to the prototypical classification, which is whiteness, and is thus seen as “alienated, fragmented, and lacking in comparison” (Amaro 53). If the end goal is inclusion, Amaro follows, what about a right of refusal to representation? This question is particularly pertinent in a context where inclusion also means exposure to heightened forms of surveillance for racialised communities, particularly in the context of policing (Lee and Chin).

Relatedly, the language of bias, inclusion, and exclusion does not account for the ways in which big data analytics are producing new racial classifications emerging not from data inputs, but within correlative models themselves. As Thao Than and Scott Wark suggest, “the application of inductive techniques to large data sets produces novel classifications. These classifications conceive us in new ways – ways that we ourselves are unable to see” (3). Following Gilroy’s idea that changes in perceptuality led by the technological revolution of the 21st century require a reimagination of race, or a repudiation of it altogether, Than and Wark claim that racialism is no longer solely predicated on visual hierarchies of the body, but rather “emerges as an epiphenomenon of automated algorithmic processes of classifying and sorting operating through proxies and abstractions” (2). This phenomenon is what they term racial formations as data formations: that is, racialisation shaped by the non-visible processing of data-generated proxies. Drawing from examples such as Facebook’s now disabled “ethnic affinity” function, which classed users by race simply by analysing their behavioural data and proxy indicators, such as language, ‘likes’, and IP address, Than and Wark show “that in the absence of explicit racial categories, computational systems are still able to racialize us” – though this may or may not map onto what one looks like (3). While the datafication of racial formations may deepen already-present inequalities for people of colour, these formations have a much more pernicious function: the transformation of racial category itself.

Can these emergent formations culled from the insights of big data be called ‘race’, or do we need a new kind of language to account for technologically induced shifts in racial perception and scale? Further, are processes of computational induction ‘racialising’ if they are producing novel classifications which often map onto, but are not constrained by previous racial categories? As Achille Mbembe notes, these questions must also be considered in the context of 21st-century globalisation and the encroachment of neoliberal logics into all facets of life, such that “all events and situations in the world of life can be assigned a market value” (Vogl 152). Our contemporary context of globalised inequality is increasingly predicated on what Mbembe describes as the “universalisation of the black condition”, whereby the racial logics of capture and predication which have shaped the lives of black people from the onset of the transatlantic slave trade, “have now become the norm for, or at least the lot of, all of subaltern humanity” (Mbembe 4). Here, it is not the biological construct of race per se that is activated in the classifying logics of capitalism and emergent technologies, but rather, the production of “categories that render disposable populations disposable to violence” (Lloyd 2). In other words, 21st century racialism is circumscribed by differential relations of human value determined by the global capitalist order. Nonetheless, these new classifications retain the pervasive logic of difference and division, reconfiguring the category of the disentitled, less-than-human Other in new formations. As Mbembe suggests, neither “Blackness nor race has ever been fixed”, but rather reconstitutes itself in new ways (6). In the next section, I turn to predictive policing technology to parse the ways in which data regimes are mapping new terrains upon which racial formations are produced and sustained.

The Problem of Prediction: Data-led policing in the U.S.

Multiple vectors of racialism, both old and new, visual and post-visual, large and small-scale, play out in the optics of predictive policing technology. Predictive policing software operates by analysing vast swaths of criminological data to forecast when and where a crime of a certain nature will take place, or who will commit it. The history of data collection is deeply entwined with the project of policing and criminological knowledge, and further, the production of race itself. As Autumn Womack shows in her analysis of “the racial data revolution” in late nineteenth century America, “data and black life were co-constituted in the service of producing a racial regime” (15). Statistical attempts to measure and track the movements of black populations during this period went hand in hand with sociological and carceral efforts to regulate and control black life as an object of knowledge. Policing was and continues to be central to this disciplinary project. As R. Joshua Scannell powerfully argues, “Policing does not have a ‘racist history.’ Policing makes race and is inextricable from it. Algorithms cannot ‘code out’ race from American policing because race is a policing technology, just as policing is a bedrock racializing technology” (108). Like data, policing and the production of race difference co-constitute one another. Predictive policing thus cannot be analysed without accounting for entanglements between data, carcerality, and racialism.

Computational methods were integrated into American criminal justice departments beginning in the 1960s. Incited by America’s “War on Crime”, the densification of urban areas following the Great Migration of African Americans to Northern cities, and the economic fall-out from de-industrialisation, criminologists began using data analytics to identify areas of high-crime incidence from which police patrol zones were constructed. This strategy became known as hot spot criminology. By 1994, the New York City Police Department (NYPD) had integrated CompStat, the first digitised, fully automated data-driven performance measurement system, into its everyday operations. CompStat is now employed by nearly every major urban police department in America. Beginning in 2002, the NYPD began using statistical insights digitally generated by CompStat to draw up criminogenic “impact zones” – namely low income, black neighbourhoods – that would be subject to heightened police surveillance. As Brian Jefferson observes, the NYPD’s statistical strategy “was deeply wound up in dividing urban space according to varying levels of policeability” (116). Moreover, impact zones “provided not only a scientific pretext for inundating negatively racialized communities in patrol units but also a rationale for micromanaging them through hyperactive tactics” such as stop-and-frisk searches (Jefferson 117). Between 2005 and 2006, the NYPD conducted 510,000 stops in impact zones – a 500% increase from the year before. The analytically derived “impact zone” can thus be understood as a bordering technology – one that sorts and divides civilian populations from those marked by higher probabilities of risk, and thus suspicion.

Policing has only grown more reliant on insights culled from predictive data models. PredPol – a predictive policing company that was developed out of the Los Angeles Police Department in 2009 – forecasts crimes based on crime history, location, and time of day. HunchLab, the more “holistic” successor of PredPol, not only considers factors like crime history, but uses machine learning approaches to assign criminogenic weights to data “associated with a variety of crime forecasting models” such as the density of “take-out restaurants, schools, bus stops, bars, zoning regulations, temperature, weather, holidays, and more” (Scannell 117). Here, it is not the omniscience of panoptic vision, or the individualising enactment of power that characterises HunchLab’s surveillance software, but the punitive accumulation of proxies and abstractions in which “humans as such are incidental to the model and its effects” (Scannell 118). Under these conditions, for example, “criminality increasingly becomes a direct consequence of anthropogenic climate change and ecological crisis” (Scannell 122).

Data-driven policing is often presented as the objective antidote to the failures of human-led policing. However, in a context where black and brown people around the world are historically and contemporaneously subjected to disproportionate police surveillance, carceral punishment, and state-sponsored violence, input data analysed by predictive algorithms often perpetuates a self-reinforcing cycle through which black communities are circuitously subjected to heightened police presence. As sociologist Sarah Brayne explains, “if historical crime data are used as inputs in a location-based predictive policing algorithm, the algorithm will identify areas with historically higher crime rates as high risk for future crime, officers will be deployed to those areas, and will thus be more likely to detect crimes in those areas, creating a ‘self-fulfilling statistical prophecy’” (109).
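The cycle Brayne describes can be made concrete with a toy simulation. The sketch below is purely illustrative and is not drawn from any actual predictive policing system: the function name, the patrol numbers, and the assumption that every patrol detects the same number of incidents in either area are all hypothetical. Two areas are given the same underlying conditions and differ only by a single recorded incident at the start.

```python
def simulate_feedback(recorded, rounds=3, hot_patrols=8,
                      other_patrols=2, detections_per_patrol=5):
    """Toy model of a location-based feedback loop (all numbers hypothetical).

    Each round, the area with the most recorded incidents is treated as the
    'hot spot' and receives more patrols. Every patrol detects the same number
    of incidents wherever it is sent, so any growth in the gap between areas
    comes from where patrols are deployed, not from any difference in the
    areas themselves.
    """
    recorded = list(recorded)
    history = [tuple(recorded)]
    for _ in range(rounds):
        # The 'prediction': rank areas by their historical recorded counts.
        hot = max(range(len(recorded)), key=lambda i: recorded[i])
        for i in range(len(recorded)):
            patrols = hot_patrols if i == hot else other_patrols
            # More patrols mean more detections, which feed the next forecast.
            recorded[i] += patrols * detections_per_patrol
        history.append(tuple(recorded))
    return history


# Two areas that differ by one recorded incident at the outset.
history = simulate_feedback([11, 10], rounds=3)
gaps = [a - b for a, b in history]  # [1, 31, 61, 91]
```

Under these assumptions, the initial difference of one recorded incident widens in every round, even though nothing distinguishes the two areas except where patrols were first sent. The model is deliberately crude; it only illustrates how recorded data can come to reflect deployment rather than underlying conditions.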

Beyond this critical cycle of ‘garbage in, garbage out,’ Than and Wark’s conceptualisation of racial formations as data formations provides insight into the ways in which predictive policing instils racialisation as a post-visual epiphenomenon of data-generated proxies. While the racist outcomes of data-led policing certainly manifest in the lived realities of poor and negatively racialised communities, predictive policing necessarily relies upon data-generated, non-visual proxies of race – postcode, history of contact with the police, geographic tags, distribution of schools or restaurants, weather, and more. Such technologies demonstrate how different valuations of risk that “render disposable populations disposable to violence” are actively produced not merely through historical data, but in the correlative models themselves (Lloyd 2). While these statistically generated “patrol zones” tend to map onto historically racialised communities, this process of racialisation does not necessarily correspond to the visual, or phenotypic signifiers of race. What emerges in these correlative models are novel kinds of classifications that arise from probabilistic inferences of suspicion through which subjects – often racial minorities – are exposed to heightened surveillance and violence. As Jefferson suggests, “modernity’s racial taxonomies are not vanishing through computerization; they have just been imported into data arrays” (6). The question remains, as neighbourhoods and ecologies, and those who dwell within them, are actively transcribed into newly ‘raced’ data formations, what becomes of the body in this post-visual shift?

2015

This provocation returns us to American Artist’s video installation, 2015. From the outset of the work, the camera’s objectivity is consistently brought into question. Gesturing towards the frame as an architectural narrowing of positionality, the constricted, stationary viewpoint of the camera fixed onto the dashboard of the police car positions the viewer within the uncomfortable observatory of the surveillant police apparatus. The window is imaged as an enclosure which frames the disproportionate surveillance of black communities by police. The world view here is captured from a single axis, a singular ideological vantage point, as an already known world of city landscape passes ominously through the window’s frame of vision. The frame’s hyper-selectivity, an enduring object of scrutiny in the field of evidentiary image-making, and visuality more broadly, is always implicated in the politics of what exists beyond its view, thus interrogating the assumed indexicality, or visual truth of the moving image.

The frame’s ambiguous functionality is made palpable when the car pulls over to stop. Over the din of a loud police siren, we hear a car door open and shut as the disembodied police officer climbs out of the car to survey the scene. Never entering the camera’s line of vision, the imagined, diegetic space outside the frame draws attention to the occlusive nature of the recorded seen-and-heard. As demonstrated in the countless acquittals of police officers guilty of assaulting or killing unarmed black people, even when death occurs within the “frame” of a surveillance camera, dash cam or a civilian bystander, this visual record remains ambiguous and is rarely deemed conclusive. Consider the cases of Eric Garner, Philando Castile, or Rodney King, a black man whose violent assault by a group of LAPD officers in 1991 was recorded by a bystander and later used as evidence in the prosecution of King’s attackers. Despite the clear visual evidence of what took place, it was the Barthesian concept of the “caption” – the contextual text which rationalises or situates an image within a given ontological framework – that led to the officers’ acquittal. As Brian Winston notes, “what was beyond the frame containing “the recorded ‘seen-and-heard’” was (or could be made to seem) crucial. This is always inevitably the case because the frame is, exactly, a “frame” – it is blinkered, excluding, partial, limited” (614). This interrogation of the fallacies of visual “evidence” is a critical armature of 2015’s intervention, one that interrogates the underlying assumptions of visuality and perception in surveillance apparatuses, constructing the frame of the police window not as a source of visible evidence, but that which obfuscates, conceals, or obstructs.

Beyond the visual, other lives of data further complicate the already troubled notion of the visible as a stable category. As Sharon Lin Tay argues, “Questions of looking, tracking, and spying are now secondary to, and should be seen within the context of, network culture and its enabling of new surveillance forms within a technology of control” (568). In other words, scopic regimes that implicitly inform the surveillance context are increasingly subsumed by the datasphere from which multiple stories and scenes may be spun. “Evidence” no longer relies solely on a visual account of “truth”, but rather on a digital record of traces. American Artist’s representation of predictive policing software and technologies of biometric identification alludes to the extent to which data is literally superimposed onto our own frame of vision. Predicting our locations, consumption habits, political views, credit scores, and criminal intentions, analytic predictive technologies condense potential futures into singular outputs. As the police car follows the erratic route of its predictive policing software on the open road, we are simultaneously made aware of a future which is already foreclosed.

Here, as 2015 so aptly suggests, the life of data exists beyond our frame of view but increasingly determines what occurs within it. Data is the text that literally “captions” our lives and identities. In zones deemed high-risk for crime by analytic algorithms, subjects are no longer considered civilians, but are hailed and interpellated as criminalised suspects through their digital subjectification. As the police car cruises through Brooklyn’s sparsely populated streets and neighbourhoods in the early morning, footage of people going about their daily business morphs into an insidious interrogation of space and mobility. As the work provocatively suggests, predictive policing constructs zones of suspicion and non-humanity through which the body is interrogated and brought into question. In identifying the body as “threat” by virtue of its geo-spatial location in a zone wholly constructed by the racializing history of policing data, the racial body is recoded, not as a necessarily phenotypic entity, but as a product of data. American Artist’s 2015 palpably conveys race as lived through data, shaping who, and what comes into the frame of the surveillant apparatus. The unadorned message: race is produced and sustained as a product of data.

Yet, at the same time, the work’s aesthetic intervention interrogates the enduring physiological nature of visual racialism through the coding of the body. As the police car cruises through the highlighted zones of predicted crime, select passers-by are singled out and scanned by a facial recognition device. This visceral reference to biometric identification – reading the body as the site and sign of identity – complicates the claim that the primordial, objectifying force of visual evidence is transcended by neutral-seeming post-visual data apparatuses. Biometric systems of measurement, such as facial templates or fingerprint identification, are inherently tied to older, eighteenth and nineteenth century colonial and ethnographic regimes of physiological classification that aimed to expose a certain truth about the racialized subject through their visual capture. Contemporary biometric technologies, as Simone Browne argues, retain the same systemic logics of their colonial predecessors, “alienating the subject by producing a truth about the racial body and one’s identity (or identities) despite the subject’s claims” (110). It has been repeatedly shown, for example, that facial recognition software demonstrates bias against subjects belonging to specific racial groups, often failing to detect or misclassifying darker-skinned subjects, an event that the biometric industry terms a “failure to enrol” (FTE). Here, blackness is imaged as outside the scope of human recognition, while at the same time, black people are disproportionately subjected to heightened surveillance by global security apparatuses. This disparity shows that while forms of racialisation are increasingly migrating to the terrain of the digital, race still inheres, even if residually, as an epidermal materialisation in the biometric evidencing of the body.

In American Artist’s 2015, the extant tension between data and the lived, phenotypic, or embodied constitution of racialism suggests that these two racializing formats interlink and reinforce each other. By evidencing the racial body, on one hand as a product of data, and on the other, an embodied, physiological construction of cultural and scientific ontologies of the Other, American Artist makes visible the contemporary and historical means through which race is lived and produced. By calling into question the visual and digital ways the racial body is made to evidence its being-in-the-world, Artist challenges and disrupts the evidentiary logics of surveillance apparatuses – namely, what Catherine Zimmer describes as the “production of knowledge through visibility” (428). By entangling racializing forms of surveillance within a realist documentary-like coded format, American Artist calls into question what it means to document, record, or survey within the frame of moving images. As data increasingly guides where we go, what we see, and whose bodies come into question, claims on the recorded seen and heard, as well as the digitally networked, must continually be interrogated. In the context of our current democratic crisis, where the volatile distinctions between “fact” and “fiction” have produced a plethora of unstable meanings, American Artist’s 2015 is a prime example of emergent activist political intervention that interrogates the underlying assumption of documentary objectivity in both cinematic and data-driven formats, subverting the racial logics that remain imbricated within visual and post-visual systems of classification.

Conclusion

This article explores the shifting terrain of racial discourse in the age and scalar magnitude of big data. Drawing from Paul Gilroy’s periodisation of racialism from Euclidean anatomy of the 19th century to the genomic revolution of the 1990s, I show that race has always been deeply entwined with questions of scale and perception. Gilroy observed that emergent digital technologies afford potentially new ways of seeing the body, and subsequently, conceiving humanity in novel scales detached from the visual. Similar insights inform Than and Wark’s prescient account of racial formations as data formations – the idea that race is increasingly being produced as a cultivation of data-driven proxies and abstractions. In the context of ongoing logics of contemporary race, American Artist’s 2015 returns consideration to the ways in which residual and emergent characteristics of racialism are embedded in everyday systems of predictive policing technology. Through multimedia intervention, Artist’s video work conveys racialism not as a single, static entity, but as a historical structure that mutates and evolves algorithmically across an ever-shifting geopolitical landscape of capital and power. In this instance, American Artist orchestrates one critical means to grasp racialism’s multiple forms, past and present, visual and otherwise, towards future modalities and determinations not yet realised.

Works cited

Amaro, Ramon. The Black Technical Object: On Machine Learning and the Aspiration of Black Being. Sternberg Press, 2023.

Amoore, Louise. “Biometric Borders: Governing Mobilities in the War on Terror.” Political Geography 25.3 (2006): 336–351.

Berman, Eli et al. Small Wars, Big Data: The Information Revolution in Modern Conflict. Princeton University Press, 2018.

Bhutta, Neil, Aurel Hizmo, and Daniel Ringo. “How Much Does Racial Bias Affect Mortgage Lending? Evidence from Human and Algorithmic Credit Decisions,” Finance and Economics Discussion Series 2022-067. Washington: Board of Governors of the Federal Reserve System, 2022.

Brayne, Sarah. Predict and Surveil: Data, Discretion, and the Future of Policing. Oxford University Press, 2020.

Browne, Simone. Dark Matters: On the Surveillance of Blackness. Duke University Press, 2015.

Chun, Wendy Hui Kyong. Discriminating Data: Correlation, Neighborhoods, and the New Politics of Recognition. The MIT Press, 2021.

DiCaglio, Joshua. Scale Theory: A Nondisciplinary Inquiry. University of Minnesota Press, 2021.

Laney, Doug. “3D Data Management: Controlling Data Volume, Velocity, and Variety”, Gartner, File No. 949, 6 February 2001, http://blogs.gartner.com/doug-laney/files/2012/01/ad949-3D-Data-Management-Controlling-Data-Volume-Velocity-and-Variety.pdf.

Gilroy, Paul. “Race Ends Here.” Ethnic and Racial Studies, vol. 21, no. 5, 1998, pp. 838–847.

Gilroy, Paul. “Race and Racism In the Age of Obama”. The Tenth Annual Eccles Centre for American Studies Plenary Lecture given at the British Association for American Studies Annual Conference, 2013.

Jefferson, Brian. Digitize and Punish: Racial Criminalization in the Digital Age. University of Minnesota Press, 2020.

Lloyd, David. Under Representation: The Racial Regime of Aesthetics. New York: Fordham University Press, 2018.

Macnish, Kevin, and Jai Galliott, editors. Big Data and Democracy. Edinburgh University Press, 2020.

Mbembe, Achille. Critique of Black Reason. Duke University Press, 2013.

Melamed, Jodi. Represent and Destroy: Rationalizing Violence in the New Racial Capitalism. University of Minnesota Press, 2011.

Noble, Safiya Umoja. Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press, 2018.

Obermeyer, Ziad, et al. “Dissecting racial bias in an algorithm used to manage the health of populations.” Science, 2019, pp. 447-453.

O’Neil, Cathy. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Allen Lane, 2016.

Rothstein, M. “Big Data, Surveillance Capitalism, and Precision Medicine: Challenges for Privacy.” Journal of Law, Medicine & Ethics, vol. 49, no. 4, 2021, pp. 666-676.

Saini, Angela. Superior: The Return of Race Science. Beacon Press, 2020.

Scannell, R. Joshua. “This Is Not Minority Report: Predictive Policing and Population Racism”. Viral Justice: How We Grow the World We Want, edited by Ruha Benjamin. Princeton University Press, 2022, pp. 106-129.

Than, Thao, and Scott Wark. “Racial formations as data formations.” Big Data & Society, 2021, vol. 8, no. 2, pp. 1-5.

Vogl, Joseph. Le spectre du capital. Diaphanes, 2013.

Winston, Brian. “Surveillance in the Service of Narrative”. A Companion to Contemporary Documentary Film, edited by Alexandra Juhasz and Alisa Lebow. John Wiley & Sons, 2015, pp. 611-628.

Womack, Autumn. The Matter of Black Living: The Aesthetic Experiment of Racial Data, 1880-1930. The University of Chicago Press, 2022.

Završnik, Aleš. “Algorithmic Justice: Algorithms and Big Data in Criminal Justice Settings.” European Journal of Criminology, vol. 18, no. 5, 2021, pp. 623–642.

Jack Wilson

Minor Tech and Counter-revolution:

Tactics, Infrastructures, QAnon

Abstract

Following repeated assertions by QAnon promoters that to understand the phenomenon one must ‘do your own research’, this article seeks to unpack how ‘research’ is understood within QAnon, and how this understanding is operationalised in the production of particular tools. Drawing on exemplar literature internal to the phenomenon, it examines discourses on the question of QAnon’s epistemology with particular reference to the stated purpose of ‘research’ and its difference to an allegedly hegemonic (or ‘mainstream’) episteme. The article then turns to how these discourses are operationalised in the research tools QAnon.pub and QAgg.news (‘QAgg’). Finally, it concludes by way of a reflection on how QAnon’s aggressively counter-revolutionary strategies and infrastructures can trouble the concept of the ‘minor’ in minor tech.

Introduction

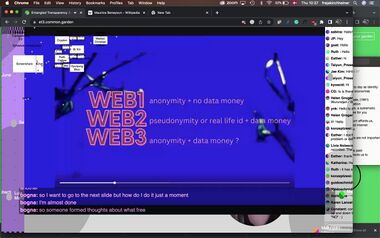

For the most part, the contributions to this issue have discussed instances of ‘minor tech’ that offer creative and necessary interventions in tactics and infrastructures that are – in their deployment by big tech – exploitative, exclusionary, and often environmentally catastrophic. As such, the impression of minor tech may well be that its ‘small’ or ‘human’ scale necessarily precludes such tactics and technologies’ use in the service of a reactionary political project. Nevertheless, this article argues that QAnon can be understood as an assemblage of ‘minor techs’: small-scale contrarian practices and infrastructures whose very granularity produces the conditions for the aggregation that is known as ‘QAnon’ to occur and mutate from the cryptic missives of one ‘anon’ among many on 4chan’s /pol/ board in late 2017 to, in 2023, a global phenomenon with ominous implications for the question of post-truth’s effects on contemporary cultural and political life (see Rothschild; Sommer, Trust the Plan).

Andersen and Cox open this issue quoting Deleuze and Guattari’s definition of ‘minor literature’ as characterised by “the deterritorialization of language, the connection of the individual to a political immediacy, and the collective arrangement of utterance” (18). They go on to suggest that minor tech’s politics of scale potentially offer an analogous operation with regard to the production of autonomous – potentially revolutionary – spaces for marginalised groups. It is in this sense that this article’s contention regarding QAnon’s being a minor tech arises. Specifically, it is in the injunction to ‘do your own research.’ Among QAnon’s myriad factions the statement is a veritable refrain that characterises involvement in the phenomenon as more than simply believing its conspiratorial worldview, but rather participating in its production by investigating its veracity for oneself and, by implication, arriving at similar conclusions. While there has been some scholarly research into various aspects of QAnon’s participatory culture (de Zeeuw and Gekker; Kir et al.; Marwick and Partin; See), how this is conceptualised and enabled within the phenomenon through minor tech tactics and infrastructures remains comparatively understudied.

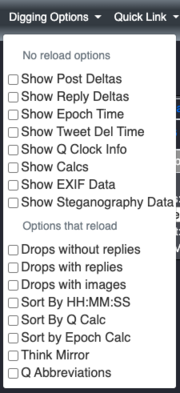

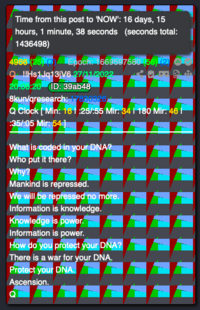

This article, accordingly, seeks to unpack how ‘research’ is understood within QAnon, and how this understanding is operationalised in the production of particular tools. Drawing on exemplar literature internal to the phenomenon, it will first examine discourses surrounding the question of QAnon’s inverted epistemology with particular reference to the stated purpose of ‘research’ and its perceived difference to an allegedly hegemonic (or ‘mainstream’) episteme. Following this analysis of QAnon’s internal discourses on the matter of ‘research,’ the discussion will then turn to how these discourses are reflected and enacted in the ‘Q Drop’ aggregators QAnon.pub and QAgg.news (‘QAgg’). Q Drops are QAnon subjects’ term for the ambiguous dispatches made by the eponymous, mysterious figure known as ‘Q’ which form the ur-text of the phenomenon. While there is a certain consistency to the Q Drops insofar as they are concerned with the actions of Donald Trump and his allies against the nefarious ‘Deep State’ or ‘Cabal’ who are alleged to have undermined the former party’s efforts to ‘Make America Great Again,’ they are also characterised by an extreme degree of vagueness which demands epistemic work on the part of the QAnon subject.

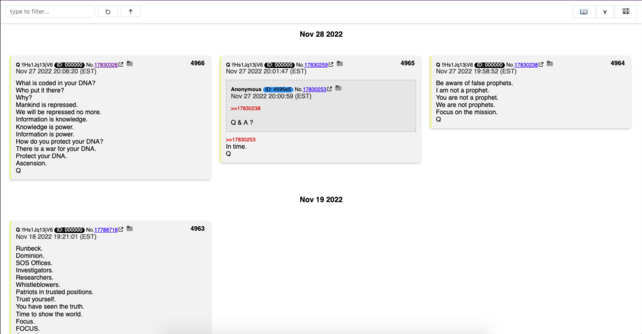

Since these materials have been posted exclusively to anarchic and unarchived image boards – first 4chan, then 8kun (formerly 8chan) – Q Drop aggregators scrape, archive, and afford users the means to do ‘research’ with the Q Drops. A notable feature of the Q Drop aggregators is their increasing complexity over time: where QAnon.pub (established March 2018) is effectively wholly concerned with enabling the analysis of the content of Q Drops, QAgg (April 2019) mines Drops for actual and esoteric meta-data, supposedly encrypted additional information that pushes the Q Drops’ semiosis to the point of potential exhaustion. The increasing granularity of how Q Drops are interpreted and applied in the ‘research’ afforded by QAgg specifically reflects a broader tendency towards the molecular intensification of QAnon subjects and speaks to a broader argument regarding precisely the ‘minor’ quality of QAnon’s technical apparatuses that make its reactionary manifestation at scale possible.

‘Research’ at a human scale

Despite the centrality of Q to the worldview of QAnon, they do not present themselves as, nor are they taken to be, a prophet bearing a revealed truth. Instead, Q characterises themselves as instructing their followers in what might be understood as a degraded form of ideology critique wherein the asserted reality of the phenomenon’s worldview is rendered visible in the mediatic traces of the world:

You are being presented with the gift of vision.

Ability to see [clearly] what they've hid from you for so long [illumination].

Their deception [dark actions] on full display.

People are waking up in mass.

People are no longer blind. (Q Drop 4550, square brackets in original)[1]

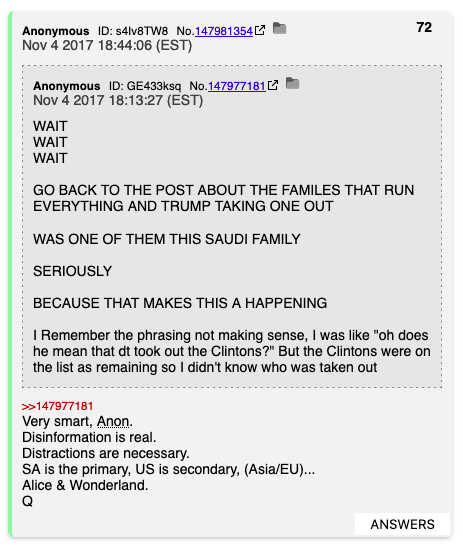

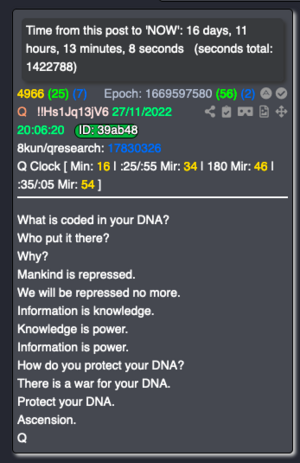

‘Research’ in QAnon is typically characterised by the mapping of contemporary events to the content or metadata of Q Drops by QAnon subjects, with Q occasionally intervening to correct or confirm QAnon subjects’ inferences and findings. Beyond the initial series of Drops where Q claimed that the arrest of Hillary Clinton was imminent – “between 7:45 AM - 8:30 AM EST on Monday - the morning on Oct 30, 2017” (1) – they very rarely make explicit claims as to the future. Instead, Q tends to vaguely intone on contemporary events or ‘correct’/‘verify’ the findings of QAnon subjects. Here, the failed prediction that was the basis of the very first Q Drop is illustrative. While Hillary Clinton was not arrested, the 2017-2019 Saudi Arabian purge began some days after the first Q Drop (namely, on the 4th of November 2017) with a wave of arrests across the Gulf State. In response, a user of 4chan’s /pol/ board posited that it was in fact this event that Q was alluding to (fig. 1). Per Q in their reply to said user: “Very smart, Anon. Disinformation is real. Distractions are necessary” (72). In essence, the first Q Drops were framed as about the then-forthcoming purge, with the discussion of Hillary Clinton being misdirection to run cover for this operation.

Rather than mirroring the didactic pedagogy and unaccountable epistemic hierarchies of the so-called "mainstream media" (Pamphlet Anon and Radix 93), Q is seen as instructing QAnon subjects in a particular way of seeing and mode of inquiry. As the QAnon promoter David Hayes (a.k.a. ‘Praying Medic’) explains in a passage on this topic that is worth quoting at length:

Q uses the Socratic method. Using questions, he’ll examine our current beliefs on a given subject. He'll ask if our belief is logical, then drop hints about facts we may not have uncovered, and suggest an alternative hypothesis. He may provide a link to a news story and encourage us to do more research. The information we need is publicly available. We're free to conduct our research in whatever way we want. We're also free to interpret the information however we want. We must come to our own conclusions because Q keeps his interpretations to a minimum. For many people, researching for themselves, thinking for themselves, and trusting their own conclusions can make following Q difficult. When you’re accustomed to someone telling you what to think, thinking for yourself can be a painful adjustment. (Hayes 17)

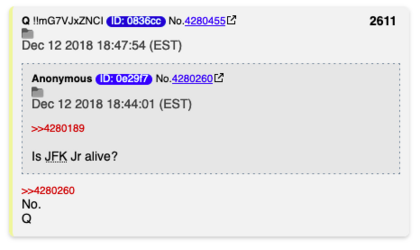

While Q possesses a certain authority in terms of having the proverbial ‘last word’ with regard to the work of QAnon subjects, this is not exercised in most cases. It is always the QAnon subject’s obligation to ‘do your own research’ – which is, again, the mapping of contemporary events to the content, metadata, and meaning of the Q Drops. Indeed, despite Q’s ostensibly ‘final’ authority with regard to what is and is not an aspect of the phenomenon’s worldview, there are some instances where the figure has been effectively ignored due to the salience of the individual and their ‘research.’ For example, there are many QAnon subjects who believe that the deceased son of the assassinated president John F. Kennedy – John F. Kennedy Jr. – is still alive (Sommer, “QAnon, the Pro-Trump Conspiracy Theorists, Now Believe JFK Jr. Faked His Death to Become Their Leader”), and this despite Q’s explicit denial thereof (fig. 2).

QAnon’s fetishization of individual interpretation as well as the salience of primary sources therein has been identified by Marwick and Partin as an instance of ‘scriptural inference.’ Tripodi characterises scriptural inference as a prevailing epistemology among religious and right-wing actors in the United States wherein “those who believe in the truth of the Bible approach secular political documents (e.g., a transcript of the president’s speech or a copy of the Constitution) with the same interpretative scrutiny” (6). While Tripodi notes an analogous compulsion among their research subjects to “do their own research” (6), the extent to which epistemic authority is located in the ‘researching’ subject is unclear. In comparison, the centrality of a particular QAnon subject’s ‘research’ to themselves is an explicit refrain: even prominent QAnon promoters describe their findings with this qualification (Colley; Dylan Louis Monroe at Conscious Life Expo 2019; The Fall of the Cabal).

The overarching impression is that ‘research’ within QAnon is not so much about working towards the production of a body of knowledge that all QAnon subjects can agree upon as it is concerned with the proliferation of many personalised ‘truths.’ As in the Q Drops, so in the mediatic traces of the world: all are material available for the individual’s interpretation of one in terms of the other towards the production of increasingly personalised and complex ‘research.’ That these conditions work to produce a worldview that is characterised at the micro- and macro-levels by a swirling mess of complexity and contradictions is simply taken as evidence of the phenomenon’s good health; there is no ‘groupthink’ (Hayes).

Nevertheless, the phenomenon’s internal heterogeneity all points to the asserted ‘truth’ of QAnon’s worldview, with contrary analysis pathologized as either being the uncritical work of someone in the thrall of the ‘mainstream’ episteme or deliberately malicious efforts of the Deep State and its agents. Actual difference – being that which is definitionally other to a subject or particular set of conditions – is not tolerated within QAnon. What is true of the phenomenon’s epistemology is also true of its worldview and accounts for QAnon’s hostility towards minoritarian movements. To the QAnon subject, America being made ‘Great Again’ is a fantasy of fascist restoration, a perverted ‘end of history’ wherein the conditions for the different or new are permanently evacuated.

Scales of ‘Research’: the Q Drop Aggregators

While QAnon has arguably always been a cross-platform phenomenon (Zadrozny and Collins), the figure of Q themselves is closely associated with the image boards 4chan, 8chan, and 8kun. Indeed, the primary mechanism through which Q’s dispatches are considered authentic is by way of their posting exclusively to the image board they call home – presently 8kun – with their current tripcode.[2] While providing a basically adequate means for performing the apparent provenance of these ambiguous missives, this practice of “no outside comms” (465) beyond the anarchic and ephemeral image boards where Q dwells generates a certain tension with the previously discussed injunction to ‘do your own research.’ In this respect, it is necessary to explain the technical conditions within which QAnon emerged in order to understand the parallel development of archival infrastructures.

4chan was launched in 2003 by the fourteen-year-old Christopher Poole as an English language clone of the Japanese board 2chan.net (Beran). Given the lack of server space initially available to him, Poole elected to limit the number of threads on any given board and archive nothing. Combined with the site’s default username – ‘Anonymous’ – and a laissez-faire moderation policy, Poole somewhat unwittingly created the conditions for the emergence of an extraordinarily dynamic and culturally significant milieu whose influence can be seen across digital culture as well as in the strategies of activist groups ranging from Occupy Wall Street, to Anonymous, to – more recently – the ‘alt-right’ and QAnon (Coleman; Phillips et al.). 8chan, meanwhile, was launched in 2013 by Fredrick Brennan and became prominent among 4chan’s more reactionary users in 2014 as a ‘free speech’-guaranteeing clone of 4chan, which at the time had banned any mention of the misogynist witch-hunt known as ‘gamergate’ (Marwick and Lewis; Sandifer). In 2019, after the respective perpetrators of the Christchurch, Poway, and El Paso massacres associated themselves with the site, it was removed from the clearnet for approximately a month before relaunching as ‘8kun’ (Hagen et al.; Keen).

While the ephemerality of 4chan was initially a means to manage limited computational resources, this quality has since come to define the culture of ‘the chans’–the global array of websites with similar affordances and user cultures that include, but are not limited to 4chan, 8chan, and 8kun (see De Keulenaar). For instance, 4chan and 8chan are both characterised by the strictly limited number of active threads (200 for 4chan, 355 for 8chan/kun) with only the most commented upon (or ‘bumped’) persisting until they too – after running out of steam or reaching the boards’ ‘bump limit’ (300 and 750 comments, respectively) – are inevitably ‘pruned’ (permanently deleted) to make way for new posts. Given the febrile rate of posting among both boards’ extensive userbases, any given thread has a strikingly short lifespan in comparison to mainstream social media platforms with a significant amount of content being pruned within a matter of minutes and the longest-lived threads persisting for only a handful of hours (Hagen, “Rendering Legible the Ephemerality of 4chan/Pol/ – OILab”).

The prevailing view on this scalar compression of many users into an extremely limited discursive environment is that it applies a kind of Darwinian pressure on the content posted to the boards (Moot’s Final 4chan Q&A). As there are no archives, content only endures if it survives this evolutionary stress and enters the embodied memory of the userbase. Although there are user-developed mechanisms of reposting to ‘counter’ this ephemerality and allow discussions to continue over longer periods of time than might be possible otherwise – for instance, through the practice of creating and maintaining ‘general threads’ on a particular topic that are revived at the point of their reaching the boards’ bump limit – these nevertheless still primarily deal in the repetition of content by reiterating a particular line of argument, reposting a particular meme, etc., rather than archiving it (OILab). Indeed, despite the fact that there are extensive and accessible archives of these boards, these infrastructures do not really figure in the discourses of 4chan and 8chan/kun as they occur, nor could they be drawn upon given the feverish temporality of posting (Hagen, “‘Who Is /Ourguy/?’”).

As a result, if one were to look for the Q Drops in situ, one would find them spread across three websites, containing seven boards therein (chronologically: /pol/ on 4chan, then /CBTS/, /TheStorm/, /GreatAwakening/ on 8chan, and /QResearch/, /patriotsfight/ and /projectdcomms/ on 8kun) with local archives for these boards ranging from non-existent (4chan) to extremely patchy and unsearchable (8chan/kun), to say nothing about the veritable ocean of unrelated and likely obscene content that one would also encounter. Under such conditions, QAnon’s ur-text appears as a distributed and disjointed series of image board posts with unstable authorship. Q Drop aggregators intervene at this point, collecting the (currently 4,966) Q Drops into an online archive and presenting them as a coherent corpus through which ‘research’ can occur. QAnon subjects do not need to navigate the hostile interface and culture of a chan board – and few do (see “Do You Believe in Coincidences?”).[3] Instead, they can ‘research’ Q Drops at their leisure on the aggregators. Additionally, the Q Drop aggregators afford the circulation of Drops across the wider web, including the major corporate platforms despite QAnon’s ostensible ‘deplatforming’ after its pandemic-facilitated ‘boom’ and the events of 6 January 2021 (O’Connor et al.). In essence, by enabling distributed small-scale acts of individual ‘research’ on the part of QAnon subjects, Q Drop aggregators facilitate the production of QAnon at the immense scale that the phenomenon has achieved. The paper will now proceed with a comparative analysis of how two major Q Drop aggregators (QAnon.pub and QAgg) make their materials available for users’ ‘research’ efforts.

QAnon.pub